TopMod, Blender, and Curved Handles

I’ve been working on a big project using Blender. More on that in a future post, but here’s a preview. The project has gotten me into a 3D art headspace, and I recently took a detour from it to work on a smaller one.

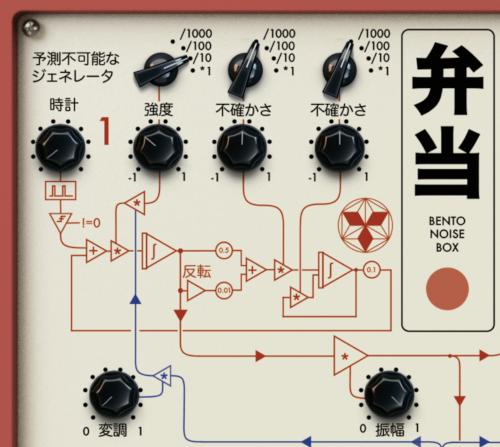

Blender isn’t my first exposure to 3D. When I was a kid, I played around a lot with POV-Ray – an outdated program even back when I was using it, but still a fun piece of history. I also found out about something called TopMod, which is an obscure research 3D modeling program. I was interested in it primarily because of Bathsheba Grossman, who used it in a series of lovely steel sculptures based on regular polyhedra.

The core idea of TopMod is that, unlike other 3D modeling programs, meshes are always valid 2-manifolds, lacking open edges and other anomalies like doubled faces. This is ensured with a data structure called the Doubly Linked Face List or DLFL. In practice, TopMod is really a quirky collection of miscellaneous modeling algorithms developed by Ergun Akleman’s grad students. These features give a distinctive look to the many sculptures and artworks made with TopMod. I identify the following as the most important:

Subdivision surfaces. TopMod implements the well-known Catmull-Clark subdivision surface algorithm, which rounds off the edges of a mesh. However, it also has a lesser-known subsurf algorithm called Doo-Sabin. To my eyes, Doo-Sabin has a “mathematical” look compared to the more organic Catmull-Clark.

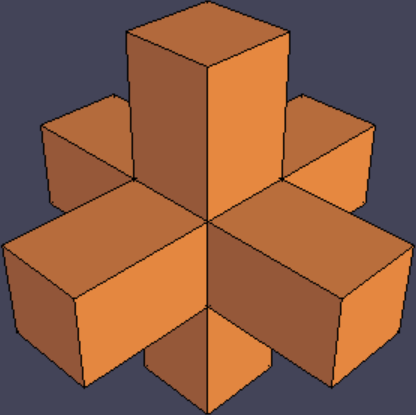

Original mesh.

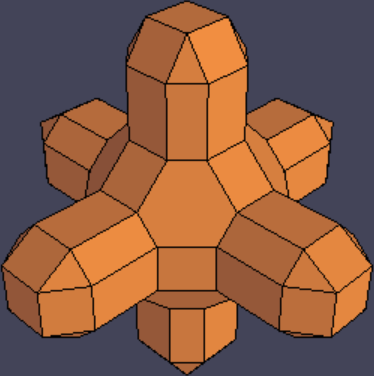

Catmull-Clark subdivision surface.

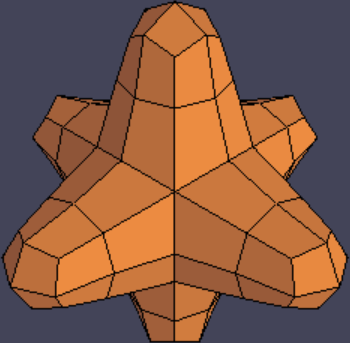

Doo-Sabin subdivision surface.

Rind modeling. This feature makes the mesh into a thin crust, and punches holes in that crust according to a selected set of faces.

Original mesh with faces selected.

After rind modeling.

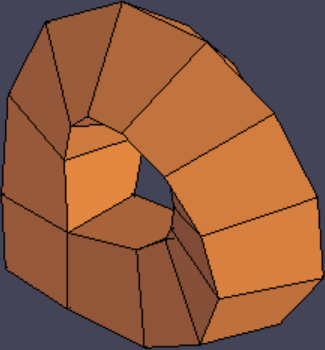

Curved handles. In this tool, the user selects two faces of the mesh, and TopMod interpolates between those two polygons while creating a loop-like trajectory that connects them. The user also picks a representative vertex from each of the two polygons. Selecting different vertices allows adding a “twist” to the handle.

Original mesh.

Handle added.
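To make the role of the two representative vertices a bit more concrete, here is a minimal Python sketch of one way the corners of the two faces could be paired up before interpolating between them. This is an illustration only, not TopMod's actual code: the names `pair_corners` and `twist_step_degrees` are hypothetical, and the sketch assumes both faces have the same number of sides and that the second face is walked in the opposite direction to the first.

```python
def pair_corners(face_a, face_b, start_a, start_b):
    """Pair each corner of face_a with a corner of face_b.

    face_a, face_b: lists of vertex indices in winding order.
    start_a, start_b: the representative vertex chosen on each face.
    Assumption: equal side counts, and face_b is traversed backwards.
    """
    n = len(face_a)
    if len(face_b) != n:
        raise ValueError("this sketch only handles faces with equal side counts")
    ia = face_a.index(start_a)
    ib = face_b.index(start_b)
    return [(face_a[(ia + k) % n], face_b[(ib - k) % n]) for k in range(n)]


def twist_step_degrees(n_sides):
    """Twist added by moving one representative vertex one corner over."""
    return 360.0 / n_sides


pentagon_a = [0, 1, 2, 3, 4]
pentagon_b = [5, 6, 7, 8, 9]

untwisted = pair_corners(pentagon_a, pentagon_b, 0, 5)
twisted = pair_corners(pentagon_a, pentagon_b, 0, 6)
```

Under this model, choosing the next vertex over on one face shifts every pairing by one corner. On a pentagonal face that is 360/5 = 72 degrees of twist per step.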

The combination of these three features, as pointed out by Akleman et al., allows creating a family of cool-looking sculptures in just a few steps:

1. Start with a base polyhedron, often a Platonic solid.
2. Add various handles.
3. Perform one iteration of Doo-Sabin.
4. Apply rind modeling, removing contiguous loops of quadrilateral faces.
5. Perform one or more iterations of Catmull-Clark or Doo-Sabin.

(I would like to highlight step 3 to point out that while Doo-Sabin and Catmull-Clark look similar to each other in a final, smooth mesh, they produce very different results if you start manipulating the individual polygons they produce, and the choice of Doo-Sabin is critical for the “TopMod look.”)

TopMod has other features, but this workflow and variants thereof are pretty much the reason people use TopMod. The program also has the benefit of being easy to learn and use.

The catch to all this is that, unfortunately, TopMod doesn’t seem to have much of a future. The GitHub repository has gone dormant and new features haven’t been added in a long time. Plus, it only seems to support Windows, and experienced users know it crashes a lot. It would be a shame if the artistic processes that TopMod pioneered were to die with the software, so I looked into ways of emulating the TopMod workflow in Blender. Let’s go feature by feature.

First we have subdivision surfaces. Blender’s Subdivision Surface modifier only supports Catmull-Clark (and a “Simple” mode that subdivides faces without actually smoothing out the mesh). However, a Doo-Sabin implementation is out there, and I can confirm that it works in Blender 3.3. An issue is that this Doo-Sabin implementation seems to produce duplicated vertices, so you have to go to edit mode and hit Mesh -> Merge -> By Distance, or you’ll get wacky results doing operations downstream. This may be fixable in the Doo-Sabin code if someone wants to take a stab at it. Also worth noting is that this implementation of Doo-Sabin is an operator, not a modifier, so it is destructive.

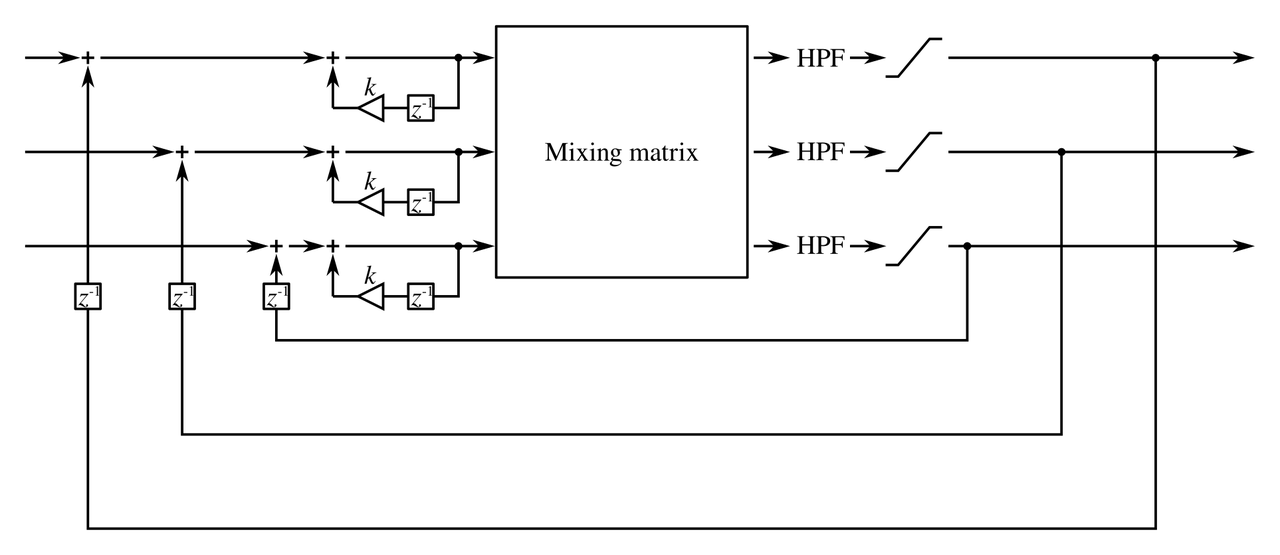

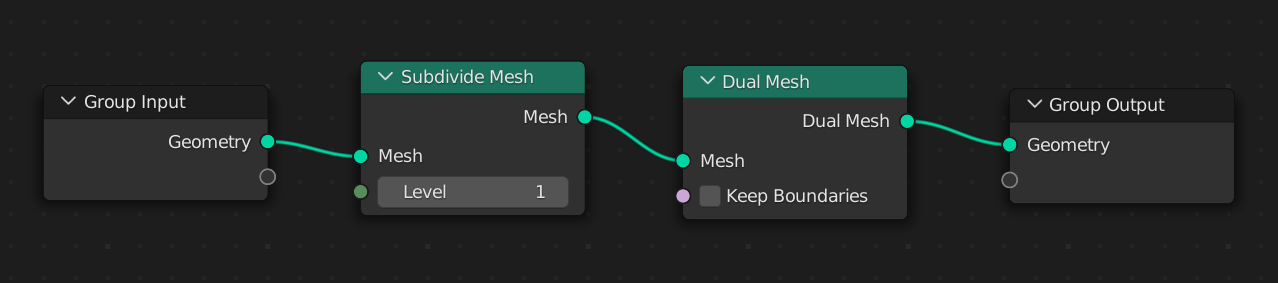
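For anyone curious what the Merge By Distance cleanup is doing conceptually, here is a minimal Python sketch of the idea: vertices closer together than a threshold are collapsed into one, and faces are re-indexed to match. This is not Blender's implementation. The function name `merge_by_distance` and the threshold value are chosen only for illustration, and the naive pairwise search is fine for a toy mesh but too slow for a real one.

```python
import math


def merge_by_distance(vertices, faces, threshold=1e-4):
    """Collapse vertices that lie within `threshold` of an earlier vertex.

    vertices: list of (x, y, z) tuples.
    faces: list of lists of vertex indices.
    Returns (merged_vertices, remapped_faces).
    """
    merged = []  # surviving vertex positions
    remap = []   # old index -> new index
    for p in vertices:
        for j, q in enumerate(merged):
            if math.dist(p, q) <= threshold:
                remap.append(j)
                break
        else:
            # No earlier vertex is close enough, so keep this one.
            remap.append(len(merged))
            merged.append(p)
    remapped_faces = [[remap[i] for i in face] for face in faces]
    return merged, remapped_faces


# Two quads that should share an edge, but whose shared vertices are duplicated.
verts = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (1, 0, 0), (2, 0, 0), (2, 1, 0), (1, 1, 0),
]
quads = [[0, 1, 2, 3], [4, 5, 6, 7]]

clean_verts, clean_quads = merge_by_distance(verts, quads)
```

After merging, the two quads reference the same two vertices along their common edge, so downstream operations see them as connected.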

EDIT: Turns out, Doo-Sabin can be done without an addon, using Geometry Nodes! This StackExchange answer shows how, using the setup in the image below: a Subdivide Mesh (not a Subdivision Surface) followed by a Dual Mesh. The Geometry Nodes modifier can then be applied to start manipulating the individual polygons in the mesh.
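A quick way to see why this node setup is plausible is to count vertices, edges, and faces. The Python sketch below does only that bookkeeping, under some assumptions: the mesh is closed, one level of Subdivide Mesh splits each n-sided face into n quads, and Doo-Sabin is the textbook version that creates one new vertex per face corner. The function names are made up for this example, and it says nothing about vertex positions.

```python
def mesh_counts(faces):
    """Return (vertices, edges, faces) for a mesh given as lists of vertex indices."""
    verts = {v for face in faces for v in face}
    edges = {
        frozenset((face[i], face[(i + 1) % len(face)]))
        for face in faces
        for i in range(len(face))
    }
    return len(verts), len(edges), len(faces)


def doo_sabin_counts(faces):
    """Counts after one Doo-Sabin step: one vertex per face corner,
    one face per original face, edge, and vertex."""
    v, e, f = mesh_counts(faces)
    corners = sum(len(face) for face in faces)
    return corners, corners + 2 * e, v + e + f


def subdivide_then_dual_counts(faces):
    """Counts after splitting each n-gon into n quads, then taking the dual."""
    v, e, f = mesh_counts(faces)
    corners = sum(len(face) for face in faces)
    sub_v = v + e + f          # original vertices + edge midpoints + face centers
    sub_e = 2 * e + corners    # split edges + spokes from each face center
    sub_f = corners            # one quad per face corner
    # The dual swaps the roles of vertices and faces and keeps the edge count.
    return sub_f, sub_e, sub_v


CUBE = [
    [0, 3, 2, 1], [4, 5, 6, 7],
    [0, 1, 5, 4], [1, 2, 6, 5], [2, 3, 7, 6], [3, 0, 4, 7],
]

print(doo_sabin_counts(CUBE))
print(subdivide_then_dual_counts(CUBE))
```

For a cube (8 vertices, 12 edges, 6 faces) both routes give 24 vertices, 48 edges, and 26 faces, which is consistent with the two procedures producing the same connectivity.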

Rind modeling can be accomplished by adding a Solidify modifier, entering face select mode, and simply removing the faces where you want holes punched. An advantage over TopMod is that modifiers are nondestructive, so you can create holes in a piecemeal fashion and see the effects interactively. To actually select the faces for rind modeling, TopMod has a tool to speed up the process called “Select Face Loop”; the equivalent in Blender’s edit mode is entering face select mode and holding down Alt while clicking an edge.

Curved handles have no equivalent in Blender. There is an unresolved question on the Blender StackExchange about it. To compensate for this, I spent the past few days making a new Blender addon called blender-handle. It’s a direct port of code in TopMod and is therefore under the same license as TopMod, GPL.

My tool is a little awkward to use – it requires you to select two vertices and then two faces in that order. TopMod, by comparison, requires only two well-placed clicks to create a handle. I’m open to suggestions from more experienced Blender users on how to improve the workflow. That said, this tool also has an advantage over TopMod’s equivalent: parameters can be adjusted with real-time feedback in the 3D view, instead of having to set all the parameters prior to handle creation as TopMod requires. The more immediate the feedback, the more expressive an artistic tool is.

Installation and usage instructions are available in the README. blender-handle is 100% robust software entirely devoid of bugs of any kind, and does not have a seemingly intermittent problem where sometimes face normals are inverted.

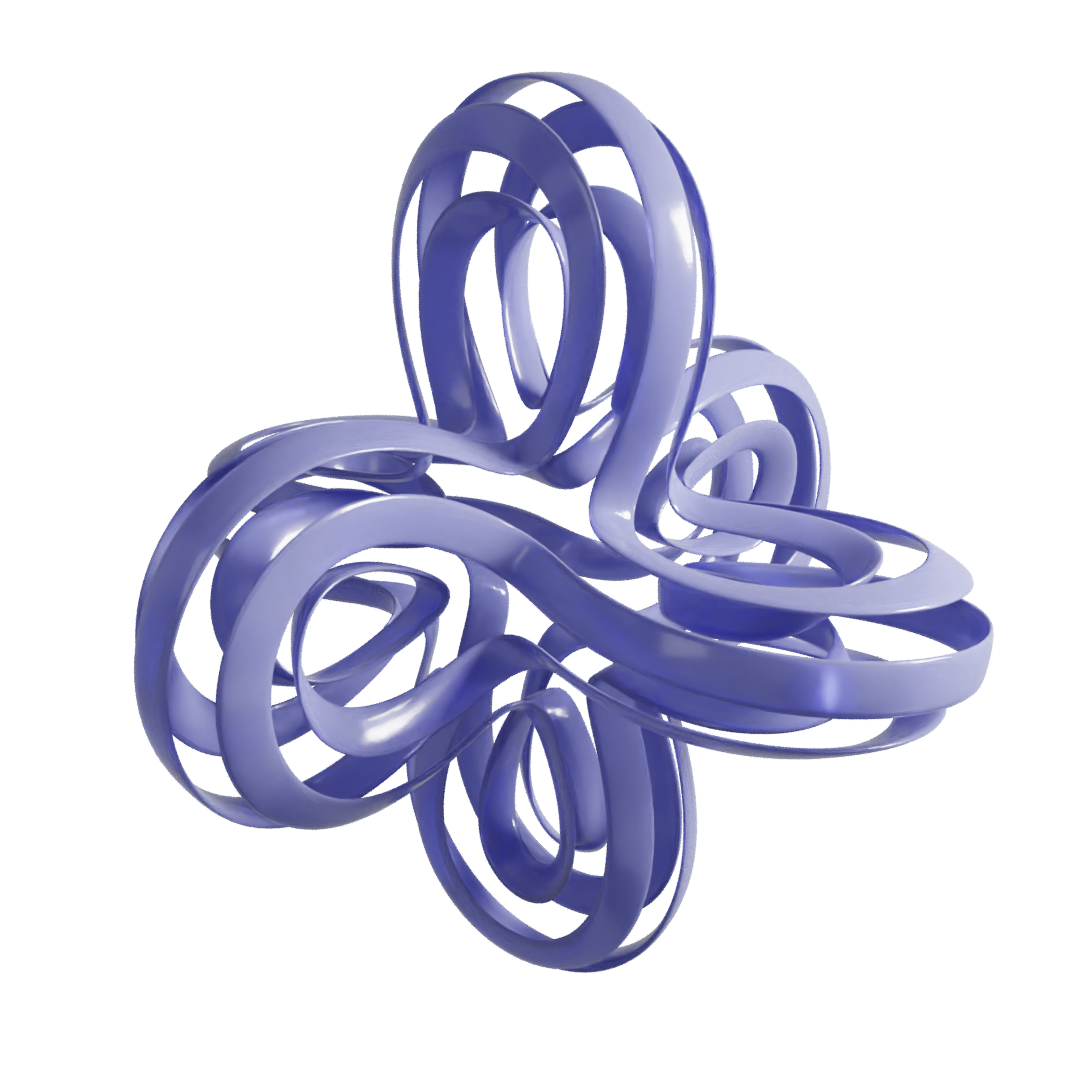

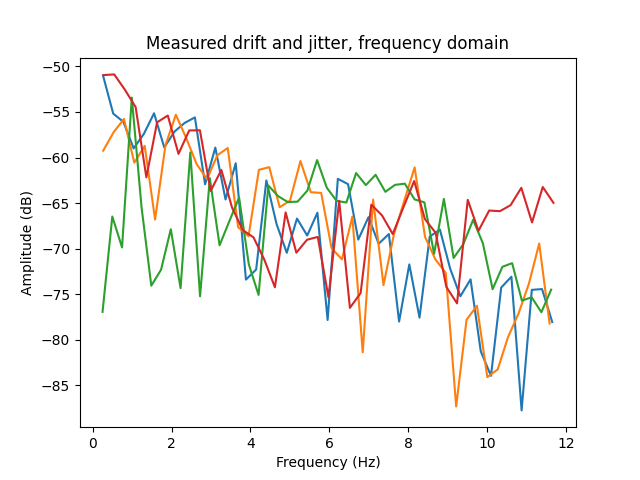

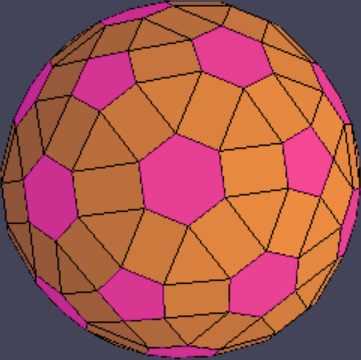

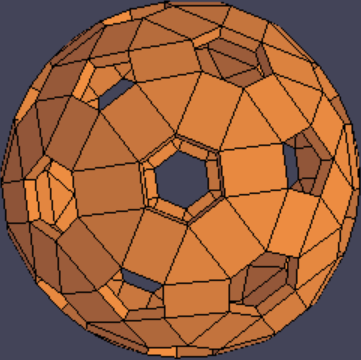

The rest of this post will be a nerdy dive into the math of the handle algorithm, so I’ll put that after the break. Enjoy this thing I made in Blender with the above methods (dodecahedron base, six handles with 72-degree twists, Doo-Sabin, rind modeling, then Catmull-Clark):